Kubernetes AppOps Security Part 1: Using Network Policies (1/2) – Basics and Good Practices

The Kubernetes network model may seem unusual to many: It requires a flat, non-hierarchical network in which everything (nodes, pods, kubelets, etc.) can communicate with everything else. Traffic in the cluster is not restricted by default, not even between namespaces. This is the point at which traditional sysadmins ask in vain about network segments and firewalls.

However, Kubernetes offers the network policy resource, which can be used to restrict network connections. A network policy declares rules for incoming and outgoing traffic on ISO/OSI layers 3 and 4 (IP addresses and ports) and assigns these rules to a group of pods using labels. The rules are then read from the Kubernetes API and enforced by the Container Network Interface (CNI) plugin (e.g., Calico, Cilium).

So, pod traffic is not restricted if

- no network policies exist

- no network policy selects the pod via its labels, or

- the utilized CNI plugin does not implement network policies

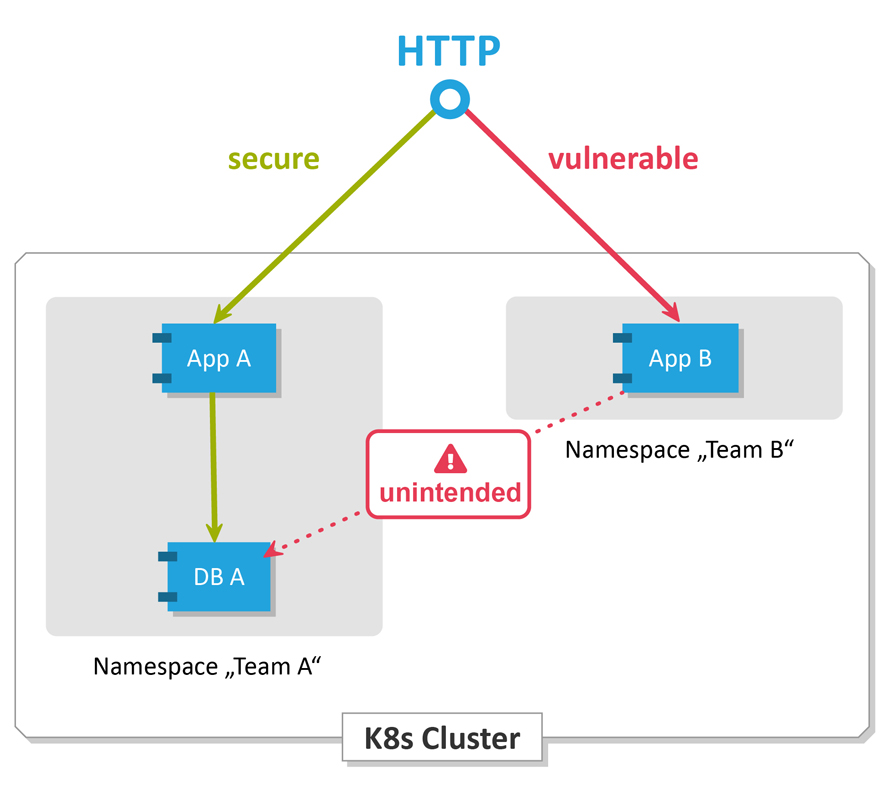

This default behavior is not ideal in terms of security. In particular, when multiple applications, teams or clients are running on one cluster, a single vulnerable application can provide an opening for attacks. Figure 1 provides an example: An attacker uses the vulnerable application of “Team B” to obtain customer data from the “Team A” database. Although the database is not directly accessible via the internet, it is misconfigured and requires no authentication. Cases like these are not academic! For example, MongoDB instances have been operated thousands of times without authentication while being directly accessible via the internet.

Outgoing traffic can also present a threat. For example, a compromised pod might try to connect to a command-and-control server. This can be mitigated by blocking outgoing connections.

General capabilities and syntax

Network policies allow you to restrict traffic and thereby limit the impact of an attack. Inbound (ingress) and/or outbound (egress) traffic can be allowed for specific pods, entire namespaces or IP address ranges. Optionally, traffic can also be restricted by port.

As is customary with Kubernetes, the desired state of the cluster is defined declaratively in YAML. A simple example of a network policy is shown in Listing 1. Pods are associated with a policy only loosely, through their labels. This applies both to the pods to which the network policy is applied (the podSelector directly below spec) and to the pods named in the rules (the podSelector below ingress in the example).

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: db-allow
      namespace: team-a
    spec:
      podSelector:
        matchLabels:
          db: a
      ingress:
        - from:
            - podSelector:
                matchLabels:
                  app: a

Listing 1: Simple network policy that prevents unwanted access from Figure 1

Since data traffic within a cluster is completely unrestricted without network policies, a network policy must first be applied that prevents all connections (deny-all policy, see Listing 2). Then the desired connections can be allowed via further network policies (whitelisting). The network policy in Listing 1 applies to all pods with the label “db: a”, and it only allows inbound traffic from pods that have the label “app: a”. In general, network policies apply to pods within the namespace in which they are created (in Listing 1, “team-a”). Nevertheless, ingress and egress rules can refer to pods in other namespaces (you can learn more about this in the next section).

At the beginning it takes a little bit of time to get used to reading the syntax. “Network policy recipes” will help introduce you to the topic: Here, network policy examples ranging from simple to complex use cases are vividly explained using animated GIFs.

Protecting individual pods as shown in Listing 1 is a start. But how can we ensure that no unwanted network accesses occur in larger clusters and complex application landscapes? The following examples show one way to solve this problem. If you want to try it yourself in a defined environment, you will find complete examples with instructions in the “cloudogu/k8s-security-demos” repository on GitHub.

With network policies you can generally allow or prohibit incoming and outgoing network traffic (ingress, egress). The question here is where to start. A sensible first step is to whitelist incoming traffic to applications. In later steps, this article describes the restriction of outgoing traffic and the handling of special namespaces such as kube-system and operator namespaces.

Whitelisting of incoming traffic

A sensible first step is the whitelisting of incoming traffic.

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: ingress-default-deny-all
      namespace: team-a
    spec:
      podSelector: {}
      ingress: []

Listing 2: Network policy that prohibits all incoming communication with pods in a namespace

This can be implemented using one network policy per namespace, which selects all pods in the namespace and blocks all incoming traffic. Listing 2 shows how it works: All pods are selected, but no ingress rules are defined. After that, pods within the namespace can no longer be accessed unless this is explicitly allowed, as Listing 1 shows.

If this is applied to each namespace (except initially for kube-system; see the next section for more information), only explicitly allowed connections between pods in the cluster can be established. This method produces a great effect with a manageable amount of effort.
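As a variation on Listing 2, the deny-all policy can state its direction explicitly. The following is a minimal sketch and not one of the original listings: the policy name is hypothetical, and the only addition is the policyTypes field. When policyTypes is omitted, Kubernetes assumes Ingress (and adds Egress only if egress rules are present), so the behavior should match Listing 2.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  # Hypothetical name; Listing 2 uses "ingress-default-deny-all"
  name: ingress-default-deny-all-explicit
  namespace: team-a
spec:
  # Selects every pod in the namespace
  podSelector: {}
  # States explicitly that this policy governs incoming traffic only
  policyTypes:
    - Ingress
  # No ingress rules: nothing is let in until another policy allows it
  ingress: []
```

Spelling out policyTypes is mostly a readability choice here; it becomes necessary once a policy is meant to restrict egress, as in Listing 7.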

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: ingress-allow-traefik-to-access-app-a
      namespace: team-a
    spec:
      podSelector:
        matchLabels:
          app: a
      ingress:
        - ports:
            - port: 8080
          from:
            - namespaceSelector:
                matchLabels:
                  namespace: kube-system
              podSelector:
                matchLabels:
                  app: traefik

Listing 3: Network policy that allows inbound traffic from the ingress controller

However, what should not be forgotten is the whitelisting of traffic from

- the ingress controller (for example NGINX or Traefik), since otherwise no incoming requests can reach the applications (Listing 3 demonstrates whitelisting for Traefik), and

- the observability infrastructure, since otherwise Prometheus (Listing 4) will stop collecting application metrics, for example.

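When selecting namespaces, please note that they carry no user-defined labels by default. For example, for Listing 3 to work, you need to add a namespace: kube-system label to the kube-system namespace. (Since Kubernetes 1.21, every namespace additionally receives the label kubernetes.io/metadata.name, set to the namespace's name, which can be used in a selector instead.) The advantage of selecting by label instead of by name is that a label can also be assigned to multiple namespaces. This makes it possible, for example, to refer to all production namespaces in a single network policy.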
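The label that Listing 3 relies on can also be declared in YAML. This is a sketch that assumes the label key and value from Listing 3 (namespace: kube-system). Applying such a manifest with kubectl apply to an existing namespace should add the label without touching the rest of the namespace, but whether system namespaces are managed this way is a decision for your cluster.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: kube-system
  labels:
    # Label matched by the namespaceSelector in Listing 3
    namespace: kube-system
```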

The simultaneous use of namespaceSelector and podSelector, as used in Listing 3 and 4, is only possible starting with Kubernetes version 1.11.
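The placement of the two selectors matters. In Listing 3 they sit in the same list item under from, so both must match (pods labeled app: traefik inside a namespace labeled namespace: kube-system). If they are written as two separate list items, the rule matches either one. The sketch below shows this second form for contrast only; the policy name is hypothetical, and such a broad rule is usually not what is intended.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  # Hypothetical name, for illustration only
  name: ingress-selector-or-example
  namespace: team-a
spec:
  podSelector:
    matchLabels:
      app: a
  ingress:
    - from:
        # First list item: any pod in a namespace labeled namespace=kube-system
        - namespaceSelector:
            matchLabels:
              namespace: kube-system
        # Second list item (note the leading dash): any pod labeled app=traefik
        # in the policy's own namespace (team-a)
        - podSelector:
            matchLabels:
              app: traefik
```

The only difference from the combined form is the extra dash in front of podSelector, which is easy to overlook in a review.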

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: ingress-allow-prometheus-to-access-db-a
      namespace: production
    spec:
      podSelector:
        matchLabels:
          db: a
      ingress:
        - ports:
            - port: 9080
          from:
            - namespaceSelector:
                matchLabels:
                  namespace: monitoring
              podSelector:
                matchLabels:
                  app: prometheus
                  component: server

Listing 4: Network policy that allows Prometheus to monitor an application

For advanced users: the kube-system namespace

As mentioned, whitelisting Kubernetes' own kube-system namespace and any other operator namespaces that may exist (ingress controller, Prometheus, Velero, and others) requires more care than whitelisting the namespaces in which ordinary applications run.

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: allow-kube-dns-all-namespaces
      namespace: kube-system
    spec:
      podSelector:
        matchLabels:
          k8s-app: kube-dns
      ingress:
        - ports:
            - protocol: UDP
              port: 53
          from:
            - namespaceSelector: {}

Listing 5: Network policy that allows the DNS service of kube-dns to be accessed

If any incoming traffic in the kube-system namespace is disallowed, this will affect, among other things:

- name resolution with the DNS addon (for example, kube-dns)

- and incoming traffic from the outside to the ingress controller, which is often operated in this namespace.

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: ingress-allow-traefik-external
      namespace: kube-system
    spec:
      podSelector:
        matchLabels:
          app: traefik
      ingress:
        - ports:
            - port: 80
            - port: 443
          from: []

Listing 6: Network policy that allows HTTP access to the Traefik ingress controller

Listing 5 shows how DNS access to the kube-dns pods can generally be authorized: UDP traffic is allowed on port 53 from all namespaces to the pods. Listing 6 shows an example of a Traefik HTTP reverse proxy, where access on ports 80 (this traffic is usually redirected to port 443) and 443 is allowed from any source, because the from field is left empty.
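DNS clients can fall back to TCP, for example when a response does not fit into a single UDP packet. A variant of Listing 5 that also admits TCP on port 53 might look like the following sketch. The policy name is hypothetical, and the k8s-app: kube-dns label is taken from Listing 5 and may differ in your cluster.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  # Hypothetical name; Listing 5 uses "allow-kube-dns-all-namespaces"
  name: allow-kube-dns-udp-and-tcp
  namespace: kube-system
spec:
  podSelector:
    matchLabels:
      k8s-app: kube-dns
  ingress:
    - ports:
        # Regular DNS queries
        - protocol: UDP
          port: 53
        # Fallback for responses too large for UDP
        - protocol: TCP
          port: 53
      from:
        # Empty selector: pods from all namespaces may query DNS
        - namespaceSelector: {}
```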

For advanced users: Restricting outgoing traffic

If incoming traffic is whitelisted in all namespaces, unauthorized access can no longer take place within the cluster: The outbound traffic of one pod is always the inbound traffic of another pod, where it must be whitelisted. However, there is another kind of outgoing traffic that requires a closer look: traffic from the cluster to surrounding networks, such as the internet or the corporate network. Many applications do not need to communicate with the world outside the cluster, or they only need to communicate with selected hosts. Examples of permitted accesses are the loading of plugins (Jenkins, SonarQube, etc.) or access to authentication infrastructure (OAuth providers such as Facebook, GitHub, etc. or corporate LDAP/Active Directory). As described above, attackers can exploit an internet connection or access to the corporate network as a gateway to other targets. Since Kubernetes version 1.8, such attacks can be mitigated by restricting egress traffic.

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: egress-allow-internal-only
      namespace: team-a
    spec:
      policyTypes:
        - Egress
      podSelector: {}
      egress:
        - to:
            - namespaceSelector: {}

Listing 7: Network policy that allows outgoing traffic only within the cluster

Again, it is possible to apply the whitelisting procedure that we are familiar with from ingress. However, in that case all ingress rules would have to be repeated for the internal egress traffic. It is therefore more pragmatic to only allow outgoing traffic within the cluster with one network policy per namespace and then allow individual pods to access the outside. Listing 7 shows a network policy that does this by applying it to all pods and allowing egress to all namespaces. Everything outside the pod network has no namespace and is therefore rejected.

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: egress-allow-all-egress
      namespace: team-a
    spec:
      policyTypes:
        - Egress
      podSelector:
        matchLabels:
          app: a
      egress:
        - to:
            - ipBlock:
                cidr: 0.0.0.0/0

Listing 8: Network policy that allows all outbound traffic for specific pods

Whitelisting then takes place through additional network policies, similar to ingress. However, destinations outside the cluster are specified as IP address blocks in Classless Inter-Domain Routing (CIDR) notation. An example that allows all traffic for specific pods is shown in Listing 8. Here you can make even more granular adjustments by allowing selected IP addresses or ranges.
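As a sketch of such a more granular rule, the following policy allows pods labeled app: a to reach external HTTPS endpoints while excluding the private address ranges defined in RFC 1918. The policy name, the choice of port 443 and the excluded ranges are assumptions for illustration; a real setup would list whatever hosts or ranges the application actually needs.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  # Hypothetical name, for illustration only
  name: egress-allow-external-https
  namespace: team-a
spec:
  policyTypes:
    - Egress
  podSelector:
    matchLabels:
      app: a
  egress:
    - to:
        - ipBlock:
            # Any destination ...
            cidr: 0.0.0.0/0
            # ... except private (RFC 1918) ranges, assumed here to cover
            # internal networks that should not be reachable via this rule
            except:
              - 10.0.0.0/8
              - 172.16.0.0/12
              - 192.168.0.0/16
      ports:
        # Assumed: the application only needs outbound HTTPS
        - protocol: TCP
          port: 443
```

Network policies are additive, so this rule does not take away the cluster-internal egress that a policy like Listing 7 allows for the same pods.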

When whitelisting egress, it is important not to forget cloud-native applications that talk directly to the Kubernetes API. Matching the IP address of the API server is challenging: the IP address of the endpoint must be specified, not that of the service. This IP address can be obtained with the following command: “kubectl get endpoints --namespace default kubernetes”. The obtained IP address can be put into a network policy like the one in Listing 8 (x.x.x.x/32 instead of 0.0.0.0/0) to allow access to the API server.
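Put together, a policy for API server access might look like the sketch below. The address 203.0.113.10 is a placeholder from a documentation-only range and port 6443 is an assumption; both need to be replaced with the values that the kubectl command above reports for your cluster. The policy name is hypothetical as well.

```yaml
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  # Hypothetical name, for illustration only
  name: egress-allow-api-server
  namespace: team-a
spec:
  policyTypes:
    - Egress
  podSelector:
    matchLabels:
      app: a
  egress:
    - to:
        - ipBlock:
            # Placeholder: replace with the endpoint IP of the API server
            cidr: 203.0.113.10/32
      ports:
        # Assumed port: use the port shown in the endpoints output
        - protocol: TCP
          port: 6443
```

If the endpoints output lists several addresses, each one would need its own ipBlock entry under to.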

Conclusion and recommendations

This article describes how standard Kubernetes network policies can be deployed with a manageable amount of effort to establish a solid layer of protection for the cluster.

However, the learning curve is not flat. Therefore, if you want to increase security with minimal effort, you should at least whitelist the ingress traffic in all namespaces except kube-system. This isolates the namespaces to a certain degree. In addition, it provides a good overview of who is communicating with whom in the cluster. Although network policies for the kube-system namespace and for egress traffic are more complex, they improve security even further and are therefore also recommended.

To try this out yourself, the “cloudogu/k8s-security-demos” repository offers an approach in which a cluster is protected step by step using the procedure described in this article.

Of course, there are pitfalls when using network policies, and we will provide some practical tips on testing and debugging in the next article of this series.


You can download the original article (German), published in JavaSPEKTRUM 05/2019.

This article is part 1 of the series “Kubernetes AppOps Security”.
