Kubernetes AppOps Security Part 6: Pod Security Policies (2/2) - Exceptions and Troubleshooting

This article is part 6 of the series “Kubernetes AppOps Security”


The previous article in this series shows how a good practice for configuring security settings in Kubernetes can be implemented cluster-wide using Pod Security Policies (PSP). Building on this, this article shows when several PSPs can make sense and how you can handle them. It also provides troubleshooting tips.

In addition, the examples highlighted in this article can be tried out in a defined environment. You will find complete examples with instructions in the “cloudogu/k8s-security-demos” repository on GitHub.

One or several PSPs?

In general, we recommend defining only one cluster-wide, restrictive PSP with which all pods comply. This PSP makes sure that all pods run with the optimized security settings. However, we still must overcome the challenges described in the previous articles: many applications cannot easily be executed with these settings, for example because they can only be started as the “root” user, because they write to the file system, or because they need certain Linux capabilities. These issues are usually resolved easily for your own applications (Security Context – Part 1: Good Practices). However, they can be more challenging for third-party applications that you need to operate in a cluster. This applies to many standard images available from Docker Hub, such as “mongo”, “postgres”, or “nginx”.

One solution can be to use a trustworthy, security-optimized image. In the specific case of “nginx”, the maintainer even offers one: “nginx-unprivileged”. Bitnami offers such images for many well-known open source products. These are also often used in the packages (charts) of Helm, the package manager for Kubernetes. Another alternative is to create your own image that meets your requirements. However, this requires additional effort on your part.

Using Helm charts often presents its own challenges. Two examples: A PSP disables all capabilities by default. If a Helm chart now needs a certain capability, it would have to be added in the SecurityContext of the affected container. In order to make sure that this can be done through the abstraction of the Helm chart, the chart must allow the SecurityContext to be changed through “values.yaml”.

It is the same when a volume and volume mount have to be added for read-only file systems. If no such options are available, then you can extend Helm charts yourself. They are open source and essentially Kubernetes YAML files, which means that they can be easily adapted to your own needs using a pull request. A local copy of the chart can be used until the pull request is accepted. After the pull request is accepted, you can switch back to the remote chart. The whole process is by no means impossible, but it does require more work on your part.
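As a rough sketch of the two cases above, the following snippet writes a values override that adds one capability and a writable temporary directory. The key names (“securityContext”, “extraVolumes”, “extraVolumeMounts”), the capability, and the file name are assumptions for illustration only; each chart defines its own keys, so check the chart's “values.yaml” first.

```shell
# Sketch only: all keys below are hypothetical and differ from chart to chart.
cat > values-override.yaml <<'EOF'
securityContext:
  capabilities:
    add:
      - NET_BIND_SERVICE   # example capability, assumed for illustration
extraVolumes:
  - name: tmp
    emptyDir: {}           # writable scratch space despite a read-only root file system
extraVolumeMounts:
  - name: tmp
    mountPath: /tmp
EOF

# The file would then be passed to Helm, for example:
# helm upgrade --install <release> <chart> --values values-override.yaml
```

If the chart exposes no such keys, this is the point where a local copy of the chart or a pull request becomes necessary.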

Depending on how the image is built and whether pull requests are accepted, it may be that these proposed solutions will not produce the desired result or only with a great deal of effort. In this case, there is another solution: Run the Pod with less restrictive security settings. These are described in additional PSPs.

Defining more permissive PSPs for each pod

If a pod, or more precisely its service account, is authorized to use several PSPs, the PSP Admission Controller proceeds as follows: PSPs that allow the pod as it is, without defaulting or changing any of its fields, are preferred. Otherwise, the first of the PSPs sorted by name that allows the pod is used (Kubernetes Docs: Pod Security Policies). This shows that it is possible to override a restrictive PSP that is enabled cluster-wide for all pods with more permissive PSPs for individual pods.

But how is such a PSP created and only enabled for individual pods? First, of course, we need the PSP itself. Here it is advisable to duplicate the restrictive PSP (see, for example, Pod Security Policies Part 1) and to adjust the value or values that should be more permissive.

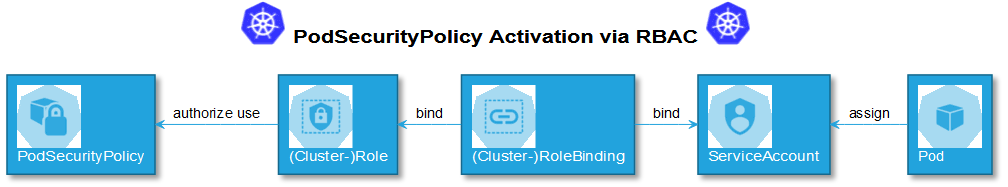
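A minimal sketch of what such a duplicated PSP might look like is shown below. It assumes that the only relaxed setting is the user ID (containers may run as root) and that all other fields stay strict. The concrete field values and the file name are assumptions, not the settings from the previous article. The name “privileged” is chosen to match the RBAC listings that follow.

```shell
# Sketch only: field values are assumed, not copied from the restrictive PSP.
cat > psp-privileged.yaml <<'EOF'
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: privileged
spec:
  # Assumed deviation from the restrictive policy: any user ID, including root.
  runAsUser:
    rule: RunAsAny
  # Assumed to remain as strict as in the restrictive policy:
  privileged: false
  allowPrivilegeEscalation: false
  requiredDropCapabilities:
    - ALL
  readOnlyRootFilesystem: true
  hostNetwork: false
  hostPID: false
  hostIPC: false
  volumes:
    - configMap
    - emptyDir
    - secret
    - persistentVolumeClaim
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
EOF

# kubectl apply -f psp-privileged.yaml
```

Keeping the file next to the restrictive PSP in source control makes the difference between the two policies easy to review.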

This PSP is then activated as usual via Role Based Access Control (RBAC). However, the use of this PSP is not permitted for all service accounts as in the previous article, but only for a specific one. In this case, it is recommended to limit it to a namespace by using “Role” and “RoleBinding” instead of “ClusterRole” and “ClusterRoleBinding”. In order to ensure that a pod can use the PSP, the service account is assigned to it.

It is advisable to define the RBAC resources in YAML so that they can be placed under source code management. However, experience shows that it is fastest to generate the YAML with kubectl. Listing 1 shows how a PSP with the name “privileged” is assigned to a service account with the name “privileged” in the namespace “default”. Please note: With “RoleBinding”, the namespace must be specified twice, once for the “RoleBinding” resource and once when referencing the service account. It is also possible to use a cluster-wide defined role (“ClusterRole”) in several namespaces. To do this, you would use “--clusterrole” instead of “--role” when creating the “rolebinding”. This eliminates the need to redundantly maintain more permissive PSPs that are used in several namespaces. Listing 2 shows the YAML representation.

    kubectl create serviceaccount privileged \
      --namespace=default \
      --dry-run -o yaml

    kubectl create role psp:privileged \
      --verb=use \
      --resource=podsecuritypolicy \
      --resource-name=privileged \
      --namespace=default \
      --dry-run -o yaml

    kubectl create rolebinding psp:privileged \
      --role=psp:privileged \
      --serviceaccount=default:privileged \
      --namespace=default \
      --dry-run -o yaml

Listing 1: Script to generate YAML that enables a PSP for a service account in a namespace via RBAC

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: privileged
      namespace: default
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: psp:privileged
    rules:
    - resourceNames:
      - privileged
      resources:
      - podsecuritypolicies
      verbs:
      - use
      apiGroups:
      - extensions
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: psp:privileged
      namespace: default
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: psp:privileged
    subjects:
    - kind: ServiceAccount
      name: privileged
      namespace: default

Listing 2: Role and RoleBinding that allow the use of PSP
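The cluster-wide role variant mentioned before Listing 1 could be generated along the following lines. This is only a sketch: the function name “generate_psp_binding” and its arguments are made up for this example, and “--dry-run=client” assumes kubectl 1.18 or later (older versions use the plain “--dry-run” from Listing 1).

```shell
# Sketch: print the YAML for a ClusterRole that permits using one PSP and for
# a namespaced RoleBinding that grants it to one service account.
# Hypothetical helper; arguments: <psp> <namespace> <service-account>
generate_psp_binding() {
  local psp="$1" namespace="$2" service_account="$3"

  # Defined once, cluster-wide
  kubectl create clusterrole "psp:${psp}" \
    --verb=use \
    --resource=podsecuritypolicy \
    --resource-name="${psp}" \
    --dry-run=client -o yaml

  echo "---"

  # Bound per namespace: references the ClusterRole instead of a Role
  kubectl create rolebinding "psp:${psp}" \
    --clusterrole="psp:${psp}" \
    --serviceaccount="${namespace}:${service_account}" \
    --namespace="${namespace}" \
    --dry-run=client -o yaml
}

# Example call:
# generate_psp_binding privileged default privileged > psp-privileged-rbac.yaml
```

Only the role binding has to be repeated for each namespace. The role itself, and with it the reference to the PSP, is maintained in one place.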

Finally, the authorized service account is assigned to a pod. The connection can be established directly in the pod (typically using a template in a higher-level controller, such as Deployment, StatefulSet, etc.) using the “serviceAccountName” field. Listing 3 provides an example. Without the field, the pod will start with the “default” service account that exists in every namespace.

    apiVersion: v1
    kind: Pod
    # ...
    spec:
      serviceAccountName: privileged
      containers:
      # ...

Listing 3: Linking a pod to a service account

If the PSP shown in the last article is in use, the pod is now authorized for both the “restricted” and “privileged” PSPs. Thus, the pod is allowed as long as it corresponds to “privileged” settings, even if it contradicts those “restricted” settings. If the pod contradicts both PSPs, it will be rejected. This means that an exception with more rights has been implemented for this pod using a more permissive PSP.

Testing and debugging

In theory, using PSPs sounds more complex than configuring security context settings, but the extra effort is still manageable. In practice, however, it turns out that the interplay of so many resources can make it surprisingly difficult to find answers to questions: Why is a pod not created or started? What is the reason for this? Why are the settings not the desired ones? Why isn’t the desired PSP being used? The answers to these and similar questions are distributed across the PSP, (cluster) role, (cluster) role binding, service account, pod, and, if necessary, the additional pod controller.

Before starting the actual troubleshooting, it is advisable to check for the pitfalls mentioned in the last article:

- The PSP Admission Controller must be activated on the API server; otherwise, the PSPs will not be evaluated.

- This admission controller processes pods at the point in time when their creation is requested via the API server. Pods that are already running will not be checked again. If the admission controller is activated later, or a PSP is created, re-authorized, or changed, the running pods will not be re-evaluated.

- The API group that is specified in the (cluster) roles must match the K8s version used:

  - Versions earlier than 1.16: “apiGroups: [extensions]”

  - Versions 1.16 and later: “apiGroups: [policy]”

If these requirements are met but the problem still persists, then you can start the actual troubleshooting phase. If you prefer a graphical depiction, you can find it online (Troubleshooting Kubernetes PodSecurityPolicies).

- After all resources have been applied to the cluster and running pods have been deleted (if necessary), it makes sense to first check whether the pods were created and launched at all:

  “kubectl get pods”

- As mentioned, pods are mostly managed by a controller, such as a Deployment, StatefulSet, or CronJob. If you don’t see the pods you want, continue troubleshooting at the controller. If the pods could not be created, you will see a warning message in the controller events, which can be queried as follows:

  “kubectl describe <controller>”

  In the case of deployments, please note that the pods are managed using ReplicaSets. Therefore, the error message is displayed in the ReplicaSet and not in the deployment itself. The cause of an error at this point can be that the pod’s service account is not authorized for any PSP or that the pod’s security settings do not correspond to any of the authorized PSPs. Example: In the security context, capabilities were explicitly requested that are not permitted by any PSP.

- If the pods are created but do not start, this is probably because the pods cannot be executed with the security settings specified by the PSP. First of all, clarify which PSP is actually applied to the pod:

  “kubectl get pod <pod> -o jsonpath='{.metadata.annotations.kubernetes\.io/psp}'”

  If the desired PSP has not been used, it helps to check whether the pod is authorized for the PSP. The following procedure is conceivable:

  - Was the right service account assigned? If not, correct it.

    “kubectl get pod <pod> -o jsonpath='{.spec.serviceAccountName}'”

  - Otherwise: Is the service account authorized for the desired PSP?

    “kubectl auth can-i use psp/<psp> --as=system:serviceaccount:<namespace>:<serviceAccount>”

    This checks whether the RBAC settings are as expected, i.e., whether the connection between (cluster) role, (cluster) role binding, and service account is correct (see illustration 1). The coupling between these resources is loose. This means that when a resource is created, it is not checked whether, for example, the referenced service account or role even exists. A single typo is enough for the privileges not to be assigned as expected. The typo is detected at the earliest when the pod starts, and even then the connection between the error message and the typo in the role binding is not obvious. An overview of who is assigned which roles can help here. The kubectl plugin RBAC Lookup offers a clear presentation. Following installation, “kubectl rbac-lookup” shows a table with the subject (for example, the service account), scope (name of the namespace or cluster-wide), and role. The opposite view, who has access to which PSP, can be obtained using the kubectl plugin who-can. The syntax is as follows:

    “kubectl who-can use psp <psp>”

  - If the service account is authorized for the PSP, but the PSP is not used, then this indicates that other PSPs are authorized as well. Here it is important to check how this interacts with the PSP selection order of the admission controller described above.

- If the desired PSP has been used and the container has the status “CrashLoopBackOff”, it can help to take a look at the logs:

  “kubectl logs <pod>”

  Here you can find information about missing privileges, capabilities, etc. If the status is “CreateContainerConfigError”, then the following can help:

  “kubectl describe pod <pod>”

  Here again the warning message in the events can provide information, for example, if the rule “runAsNonRoot” is enabled but a container would be started as the “root” user. In both cases the settings have to be changed so that the pod is allowed by the PSP. For example, the user ID can be set using the SecurityContext, volumes can be mounted (to make temporary directories writable in read-only file systems), or another image can be selected that can start without capabilities. An alternative is the use of a more permissive PSP as described above.
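The checks for a pod that exists but did not get the desired PSP can be combined into a small helper. The following is a sketch, not a complete diagnosis tool: the function name “psp_check” and its arguments are made up for this example, and it only uses the kubectl calls already shown in the list above.

```shell
# Sketch: show which PSP admitted a pod, which service account it runs as,
# and, if the PSP is not the desired one, whether that account may use it.
# Hypothetical helper; arguments: <pod> <namespace> <desired-psp>
psp_check() {
  local pod="$1" namespace="$2" wanted_psp="$3"
  local used_psp service_account

  # The admission controller records the applied PSP in an annotation
  used_psp="$(kubectl get pod "$pod" --namespace "$namespace" \
    -o jsonpath='{.metadata.annotations.kubernetes\.io/psp}')"
  service_account="$(kubectl get pod "$pod" --namespace "$namespace" \
    -o jsonpath='{.spec.serviceAccountName}')"

  echo "PSP in use: ${used_psp:-<none>}"
  echo "Service account: ${service_account:-<none>}"

  if [ "$used_psp" != "$wanted_psp" ]; then
    # Prints "yes" or "no" depending on the RBAC configuration
    echo "May use ${wanted_psp}: $(kubectl auth can-i use "podsecuritypolicy/${wanted_psp}" \
      --namespace "$namespace" \
      --as="system:serviceaccount:${namespace}:${service_account}")"
  fi
}

# Example call:
# psp_check <pod> default privileged
```

If the last line prints “no”, the role, role binding, and service account names are the first place to look for typos.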

Once the pods have started, it makes sense to perform a final manual check of the effective settings. Listing 4 shows how some of the values that are active in the container are queried. However, this requires a container that contains a shell. If the application container is delivered without a shell (which is recommended for security reasons), a temporary pod can help. In this container, commands from Listing 4 can be executed interactively without “kubectl exec”. The pod can also be started with a specific service account (Kubectl version 1.18):

“kubectl run tmp --rm -ti --image debian:buster --overrides='{"spec": {"serviceAccountName": "privileged"}}' -- /bin/bash”

    # Query the capabilities that are active in the container:
    capsh --decode="$(kubectl exec <pod> -- cat /proc/1/status | grep CapBnd | cut -d':' -f2)"

    # Where "capsh" is not available (e.g. Windows, Mac), Docker can help:
    docker run --rm --entrypoint capsh debian:buster \
      --decode="$(kubectl exec <pod> -- cat /proc/1/status | grep CapBnd | cut -d':' -f2)"

    # Check whether seccomp is active ("2" means active)
    kubectl exec <pod> -- cat /proc/1/status | grep Seccomp

    # Check whether privilege escalation is possible
    kubectl exec <pod> -- cat /proc/1/status | grep NoNewPrivs

    # Simple test for writing to a read-only file system
    kubectl exec <pod> -- touch a
    # touch: cannot touch 'a': Read-only file system

    # Query the environment of a container
    kubectl exec <pod> -- env

    # Query the user of a container
    kubectl exec <pod> -- id

Listing 4: Check the effective settings in the container
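The numeric values in “/proc/1/status” are easy to misread. As a small sketch, the following filter translates the two fields queried in Listing 4 into words. The function name “explain_status” is made up for this example. The mapping follows the Linux proc documentation: Seccomp 0 is disabled, 1 is strict mode, 2 is filter mode, and NoNewPrivs 1 means that the process cannot gain additional privileges.

```shell
# Sketch: reads the content of /proc/<pid>/status on stdin and explains
# the Seccomp and NoNewPrivs fields. Hypothetical helper for illustration.
explain_status() {
  awk '
    $1 == "Seccomp:" {
      mode[0] = "disabled"; mode[1] = "strict"; mode[2] = "filter"
      print "seccomp: " (($2 in mode) ? mode[$2] : "unknown (" $2 ")")
    }
    $1 == "NoNewPrivs:" {
      print "privilege escalation: " (($2 == 1) ? "blocked" : "possible")
    }
  '
}

# Example call:
# kubectl exec <pod> -- cat /proc/1/status | explain_status
```

Because the filter only reads standard input, it also works with the temporary pod approach described above.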

Recommendation for the use of PSPs

In summary, it definitely makes sense to use PSPs, since they help boost the security of the cluster. This is particularly true with Kubernetes, since many default values were chosen more with a view to compatibility than security. This can be effectively optimized in many places using PSPs. When setting up a new cluster, it is advisable to start directly with a restrictive PSP. It is more difficult to introduce a PSP retrospectively in a production cluster. You can still optimize security this way, but it will require additional effort. Here it is advisable to first try out the settings successively for all pods in the cluster using the security context. This lets you check whether and how the pods can be executed with the settings. If the settings work with all pods, then the PSP can be introduced with less risk and effort, and security can be improved across the cluster for future applications.

Conclusion

The previous article describes how the standard settings in Kubernetes can be made more secure using PSPs, since they can restrict privileges. This article shows solutions to the challenges that arise as a result. In practice, it may occur that images cannot be executed with a reasonable amount of effort using the settings specified by a cluster-wide PSP. In that case, pods can be excluded from the generally applicable PSP. This can be achieved through additional, less restrictive PSPs, for which individual pods can be authorized if necessary. Since PSPs are authorized using RBAC, many loosely coupled Kubernetes resources must interact with each other to be used successfully: the PSP, (cluster) Role, (cluster) RoleBinding, service account, pod, and, if necessary, the additional pod controller. This is why troubleshooting can be challenging. This article offers a troubleshooting guide for these situations. Despite everything, this article recommends the use of PSPs. In particular, if they are used from the start, they increase the general security of all applications in the cluster with a manageable amount of effort.

To conclude this series of articles, I would like to thank my colleagues from Cloudogu GmbH. On the one hand, I would like to express my gratitude to all of my colleagues in the Marketing Department for their support with organizational matters, proofreading, creating graphics, and translation. On the other hand, I would like to express my special thanks to Sebastian Sdorra for the many constructive content-related suggestions regarding each individual article.

Download this article (PDF)

You can download the original article (German), published in JavaSPEKTRUM 04/2020.
