GitOps Repository Structures and Patterns Part 6: Example Repositories

This article is part 6 of the series “GitOps Repository Structures and Patterns”.

In this final part of the GitOps repository structures and patterns series, I show example repositories that provide templates, ideas and tips for your own projects. Some recurring themes emerge, some of which are named differently: Structures for applications or teams, structures for cluster-wide resources, and structures for bootstrapping.

The examples also show that in principle, hardly any differences between Argo CD and Flux are necessary regarding repo structures. These are limited to bootstrapping and linking. Since Kustomize is understood by both Argo CD and Flux via kustomization.yaml, it turns out to be an operator-agnostic tool.

The first part of this series gives an overview of GitOps repository patterns and structures. The second part introduces operator deployment patterns, the third repository patterns, the fourth promotion patterns and the fifth wiring patterns.

Example 1: Argo CD Autopilot

- Repo pattern: Monorepo

- Operator pattern: “Instance per Cluster” or “Hub and Spoke”

- Operator: Argo CD

- Bootstrapping: argocd-autopilot CLI

- Linking: Application, ApplicationSet, Kustomize

- Features:

  - Automatic generation of the structure and YAML via CLI

  - Manage Argo CD itself via GitOps

  - Solution for cluster-wide resources

- Source: argoproj-labs/argocd-autopilot

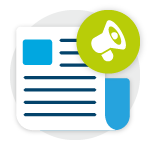

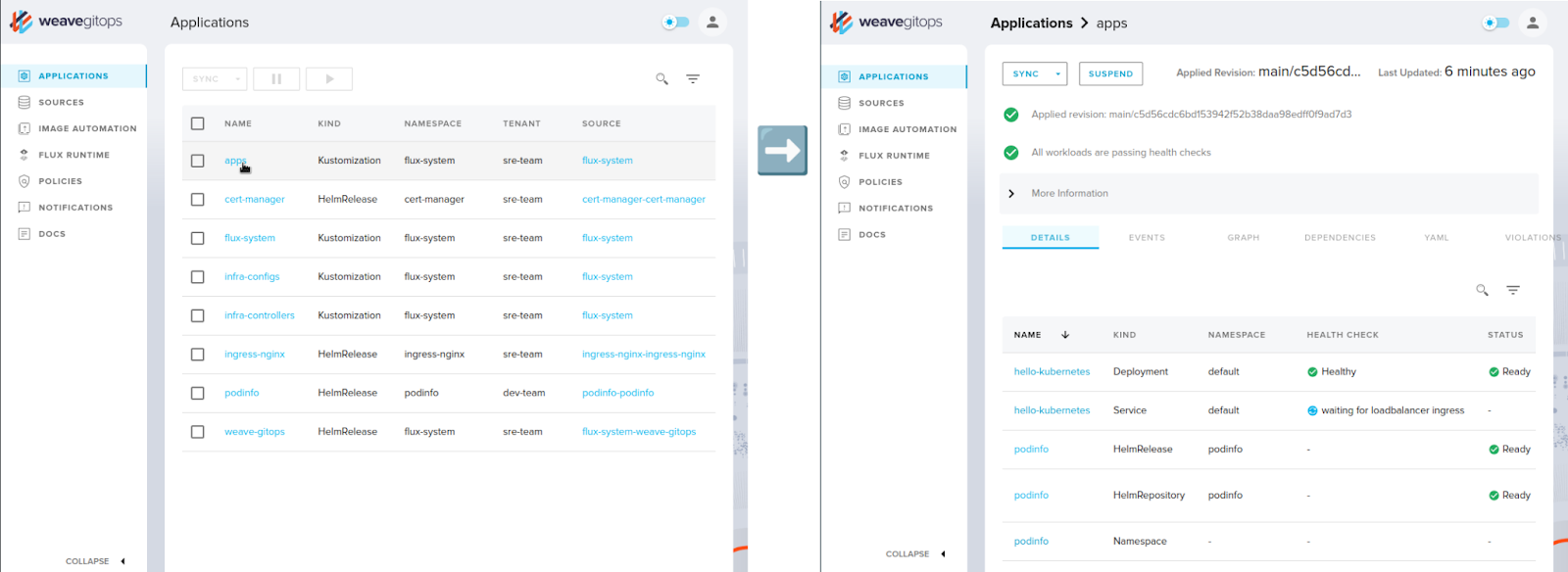

Figure 1: Repo structure for argocd-autopilot

Argo CD autopilot is a command line interface (CLI) tool that is intended to simplify installation and getting started with Argo CD. To this end, it provides the ability to bootstrap Argo CD in the cluster and to create repo structures.

The bootstrapping of Argo CD is done with a single command: argocd-autopilot repo bootstrap. To also deploy applications with the resulting structure, an AppProject (command project create) and an Application (command app create) are required as well. Figure 1 shows the resulting repo structure, which can also be viewed on GitHub at schnatterer/argocd-autopilot-example. The relationships are described below using the numbers in the figure:

1. The Application autopilot-bootstrap manages the folder bootstrap and thus includes all other Applications in this enumeration. It itself is not under version control, but is imperatively applied to the cluster during bootstrapping.

2. The Application argo-cd manages Argo CD itself via GitOps.

3. To this end, it contains a Kustomization which includes additional resources from the internet. It directly references a kustomization in the repo of autopilot, which in turn fetches all resources necessary for installing Argo CD from the repo of Argo CD itself, by pointing to the stable branch of Argo CD.

4. The ApplicationSet cluster-resources references all JSON files under the path bootstrap/cluster-resources/ using the git generator for files. This can be used to manage cluster-wide resources, such as namespaces used by multiple applications. By default, only the in-cluster.json file is located here, which contains values for the name and server variables. In the ApplicationSet template, these variables are used to create an Application that references the manifests underneath bootstrap/cluster-resources/in-cluster/. This creates the namespace argocd in the cluster where Argo CD is deployed. This is suitable for the instance per cluster pattern, but is extensible to other clusters to implement the hub and spoke pattern.

5. The Application root is responsible for including all AppProjects and Applications that are created below projects/. After executing the bootstrap command, this folder is still empty.

6. Each time the project command is executed, an AppProject and an associated ApplicationSet are generated in a file. These are intended for the implementation of different environments. The ApplicationSet references all config.json files located in subfolders of the apps folder for the respective environment, for example apps/my-app/overlays/staging/config.json, using the git generator for files. However, the apps folder is initially empty and no applications are generated.

7. By executing the command app, the folder apps is filled with the structure for an Application in an environment. This includes the config.json described in the last point, by means of which the ApplicationSet located in the projects folder generates an Application that deploys the folder itself, for example apps/my-app/overlays/staging. This folder can be used to deploy config that is specific to an environment.

8. In addition, a kustomization.yaml is created pointing to the base folder. This folder can be used to deploy config that is the same in all environments. By this separation, redundant config is avoided.

Further environments can be added analogously to items 6 to 8.

As an example, Figure 1 shows a production subfolder in the apps folder and a YAML file in projects.
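To make item 4 more tangible, the following is a minimal sketch of an ApplicationSet that uses the git generator for files. It is not the file generated by Autopilot: the repoURL is a hypothetical placeholder, and the project and sync settings are assumptions chosen for illustration. The name and server parameters are taken from the JSON files, as described above.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: cluster-resources
  namespace: argocd
spec:
  generators:
    - git:
        # Hypothetical placeholder for your GitOps repo
        repoURL: https://github.com/example-org/gitops-repo.git
        revision: HEAD
        files:
          # One Application is generated per matching JSON file,
          # e.g. in-cluster.json with the keys "name" and "server"
          - path: bootstrap/cluster-resources/*.json
  template:
    metadata:
      name: 'cluster-resources-{{name}}'
    spec:
      project: default   # assumption for this sketch
      source:
        repoURL: https://github.com/example-org/gitops-repo.git
        targetRevision: HEAD
        # Manifests for the cluster described by the JSON file
        path: 'bootstrap/cluster-resources/{{name}}'
      destination:
        server: '{{server}}'
      syncPolicy:
        automated: {}
```

With this approach, adding a further cluster for the hub and spoke pattern would amount to adding one more JSON file and a folder of the same name.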

Finally, it should be mentioned that there are reasons to be cautious when using Autopilot in production. The project does not describe itself as stable and is still at a “0.x” version. It is also not part of the official “argoproj” organization on GitHub, but lives under “argoproj-labs”. The commits come mainly from one company: Codefresh. So it is conceivable that the project will be discontinued or that breaking changes will occur. This makes it inadvisable to use it in production.

Also, by default, the Argo CD version is not pinned. Instead, the kustomization.yaml (3. in Figure 1) ultimately references the stable branch of the Argo CD repo. We recommend referencing a fixed, deterministic version via Kustomize instead. A non-deterministic version invites trouble: Upgrades of Argo CD could go unnoticed. What about breaking changes in Argo CD? Which version does one restore in a disaster recovery case?
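One way to pin the version is to reference a tagged release of the Argo CD manifests directly. The following kustomization.yaml is only a sketch under assumptions: it bypasses the intermediate kustomization in the Autopilot repo, and the tag v2.9.3 is merely an example, not a recommendation.

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: argocd
resources:
  # Tagged release instead of the moving "stable" ref.
  # v2.9.3 is an example tag; use the version you have tested.
  - https://raw.githubusercontent.com/argoproj/argo-cd/v2.9.3/manifests/install.yaml
```

An upgrade then becomes an explicit commit that changes the tag, which is visible in the Git history and can be reverted.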

The repository structure that Autopilot creates is complicated, i.e. difficult to understand and maintain. The amount of concentration required to understand Figure 1 and its description speaks for itself. In addition, there are less obvious issues: Why is the autopilot-bootstrap application (1. in Figure 1) not in the GitOps repository, but only in the cluster?

The approach of an ApplicationSet inside the AppProject’s YAML file pointing to a config.json is hard to understand (4. and 6. in Figure 1). The mixture of YAML and JSON makes it worse.

The cluster-resources ApplicationSet is generally a well-scalable approach for managing multiple clusters via the hub and spoke pattern. However, JSON must be written here as well (4. in Figure 1).

Autopilot models environments via Argo CD Projects (6. and 7. in Figure 1). With this monorepo structure, how would it be feasible to separate different teams of developers? One idea would be to use multiple Argo CD instances according to the “instance per cluster” pattern. In this case, each team would have to manage its own Argo CD instance.

Many organizations like to outsource such tasks to platform teams and implement a repo per team pattern. This is not intuitive with Autopilot. The 2nd example shows an alternative for this.

Example 2: GitOps Playground

- Repo pattern: “Repo per team” mixed with “Repo per app”

- Operator pattern: Instance per Cluster (“Hub and Spoke” also possible)

- Operator: Argo CD (Flux also possible)

- Bootstrapping: helm, kubectl

- Linking: Application

- Features:

  - Manage Argo CD itself via GitOps

  - Solution for cluster-wide resources

  - Multi-tenancy: Central operator for multiple teams on a single cluster with namespace environments (multiple clusters possible)

  - Env per app pattern

  - Config update and config replication via CI server

  - Mixed repo patterns

  - Examples for Argo CD and Flux

- Source: cloudogu/gitops-playground

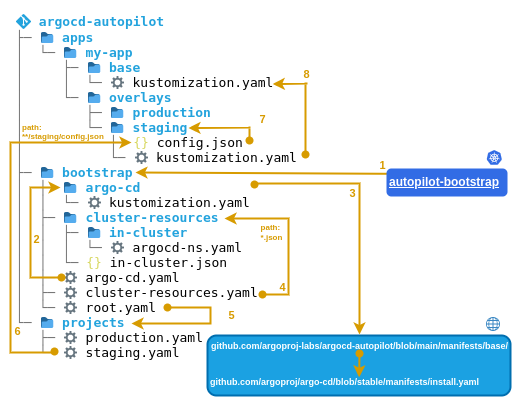

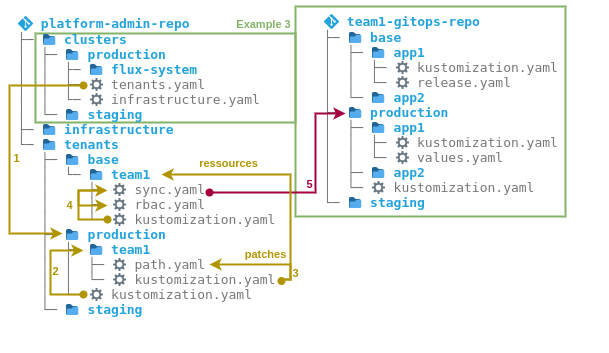

Figure 2: Relationship of the GitOps Repos in the GitOps Playground (Argo CD)

The GitOps Playground provides an OCI image that can be used to provision a Kubernetes cluster with everything needed for operation using GitOps and illustrates this using sample applications. Tools installed include GitOps operator, Git server, monitoring and secrets management. For the GitOps operator, you have the choice between Argo CD and Flux. In what follows, we focus on Argo CD because (unlike Flux) it does not itself make suggestions about repo structure. Also, there are fewer public examples of repo structures using Argo CD that are ready for production.

In the GitOps Playground, Argo CD is installed so that it can operate itself via GitOps. In addition, a “repo per team” pattern mixed with a “repo per app” pattern is implemented. Figure 2 shows how the GitOps repos are wired.

The GitOps Playground performs some imperative steps once for bootstrapping Argo CD during installation. In the process, three repos are created and initialized:

- argocd (management and configuration of Argo CD itself),

- example-apps (example for the GitOps repository of a developer/application team) and

- cluster-resources (example for the GitOps repo of a cluster administrator or an infra/platform team).

Argo CD is installed once using a Helm chart. Here helm template is used internally. An alternative would be to use helm install or helm upgrade -i. Afterwards, however, the secrets in which Helm manages its state should be deleted. Argo CD does not use them, so they would become obsolete and only cause confusion.

To complete the bootstrapping, two resources are also imperatively applied to the cluster: an AppProject named argocd and an Application named bootstrap. These are also contained in the argocd repository.
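As an illustration, an Application named bootstrap that points to an applications folder in the argocd repo could look roughly like the following. This is a sketch, not the manifest shipped with the GitOps Playground: the repoURL, the branch name and the sync policy are assumptions.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: bootstrap
  namespace: argocd
spec:
  # The AppProject that is applied together with this Application
  project: argocd
  source:
    # Hypothetical URL of the argocd repo on your Git server
    repoURL: https://git.example.com/argocd/argocd.git
    targetRevision: main   # assumed branch name
    # Folder containing further Application manifests,
    # including this one (App-of-Apps pattern)
    path: applications
  destination:
    server: https://kubernetes.default.svc
    namespace: argocd
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
```

Because the manifest itself lives in the folder it manages, later changes to it are rolled out via GitOps rather than by applying it again by hand.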

From there, everything is managed via GitOps. The following describes the relationships using the numbers in the figure:

1. The Application bootstrap manages the applications folder, which also contains bootstrap itself. This allows changes to bootstrap to be made via GitOps. Using bootstrap, other applications are deployed (App-of-Apps pattern).

2. The Application argocd manages the folder argocd, which contains the resources of Argo CD as an umbrella Helm chart. Here, the values.yaml contains the actual config of the Argo CD instance. Additional resources (for example secrets and Ingresses) can be deployed via the templates folder. The actual Argo CD chart is declared in the Chart.yaml.

3. The Chart.yaml contains the Argo CD Helm chart as a dependency. It references a deterministic version of the chart (pinned via Chart.lock) that is pulled from the chart repository on the Internet. This mechanism can be used to update Argo CD via GitOps.

4. The Application projects manages the projects folder, which in turn contains the following AppProjects:

   - argocd, which is used for bootstrapping,

   - default, which is built into Argo CD (its permissions are restricted compared to the default behavior to reduce the attack surface, see Argo CD End User Threat Model),

   - an AppProject per team (to implement least privilege and notifications per team): cluster-resources (for platform admins, needs more privileges on the cluster) and example-apps (for developers, needs fewer privileges on the cluster).

5. The Application cluster-resources points to the argocd folder in the cluster-resources repo. This repo has the typical folder structure of a GitOps repo (explained in the next step). This way administrators use GitOps in the same way as their “customers” (the developers) and can provide better support.

6. The Application example-apps points to the argocd folder in the example-apps repo. Like cluster-resources, it also has the typical folder structure of a GitOps repo:

   - apps: contains Kubernetes resources of all applications (the actual YAML).

   - argocd: contains Argo CD Applications pointing to subfolders of apps (App-of-Apps pattern).

   - misc: contains Kubernetes resources that do not belong to specific applications (for example, namespaces and RBAC).

7. The Application misc points to the folder misc.

8. The Application my-app-staging points to the apps/my-app/staging folder within the same repo. This provides a folder structure for promotion. The Applications with the my-app- prefix implement the “environment per app” pattern. This allows each application to use individual environments, e.g. production and staging, or none at all. The actual YAML can be pushed here either manually or automatically. The GitOps Playground contains examples that implement the config update via CI server based on an app repo. This approach is an example of mixing the “repo per team” and “repo per app” patterns.

9. The associated production environment is implemented via the my-app-production Application, which points to the apps/my-app/production folder within the same repo. In general, it is recommended to protect all production folders from manual access if the SCM used supports this (e.g. SCM-Manager). Instead of the different YAML files used in the diagram, these Applications could also be implemented as follows:

   - two Applications in the same YAML file,

   - two Applications with the same name in different Kubernetes namespaces, which requires these namespaces to be configured in Argo CD,

   - an ApplicationSet that uses the git generator for folders.
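The umbrella chart described in items 2 and 3 can be sketched with a Chart.yaml like the one below. The chart name, its own version and the dependency version are assumptions for illustration; the dependency version shown is only an example and should be replaced by one you have verified in the chart repository.

```yaml
apiVersion: v2
name: argocd
description: Umbrella chart wrapping the upstream Argo CD chart
type: application
# Version of the umbrella chart itself (arbitrary in this sketch)
version: 1.0.0
dependencies:
  - name: argo-cd
    # Example version only; the resolved version is recorded in
    # Chart.lock after running "helm dependency update"
    version: 5.51.6
    repository: https://argoproj.github.io/argo-helm
```

In an umbrella chart, values for a dependency are nested under the dependency's name in values.yaml, so in this sketch the Argo CD configuration would sit under the key argo-cd.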

The GitOps Playground itself uses a single Kubernetes cluster for simplicity, implementing the “instance per cluster” pattern. However, the repo structure shown can also be used for multiple clusters using the “hub and spoke” pattern: Additional clusters can be defined either in values.yaml or as secrets using the templates folder.
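For the second option, Argo CD recognizes additional clusters declaratively through Secrets carrying a specific label. The following is a sketch with hypothetical names and placeholder credentials; in practice, the token should not be committed in plain text but supplied through your secrets management.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: cluster-spoke-1          # hypothetical name
  namespace: argocd
  labels:
    # Marks this Secret as a cluster definition for Argo CD
    argocd.argoproj.io/secret-type: cluster
type: Opaque
stringData:
  name: spoke-1                  # display name of the cluster in Argo CD
  server: https://spoke-1.example.com:6443   # placeholder API server URL
  config: |
    {
      "bearerToken": "<service account token>",
      "tlsClientConfig": {
        "insecure": false,
        "caData": "<base64-encoded CA certificate>"
      }
    }
```

Applications can then target the additional cluster by setting their destination server to the URL defined here.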

Example 3: Flux Monorepo

- Repo pattern: Monorepo

- Operator pattern: Instance per Cluster

- Operator: Flux (could be implemented similarly with Argo CD)

- Bootstrapping: flux CLI

- Linking: Flux Kustomization, Kustomize

- Features:

  - Manage Flux itself via GitOps

  - Solution for cluster-wide resources

- Source: fluxcd/flux2-kustomize-helm-example

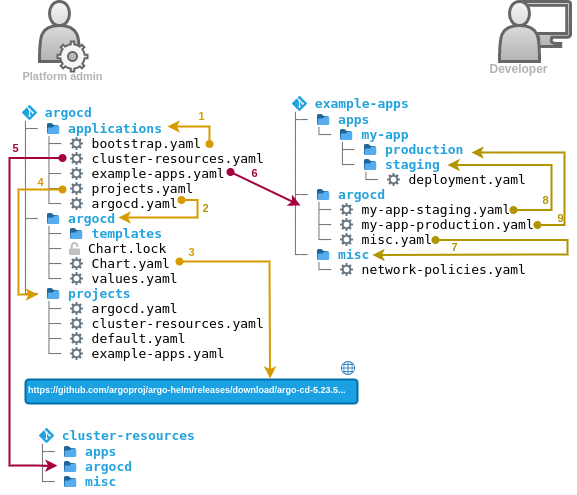

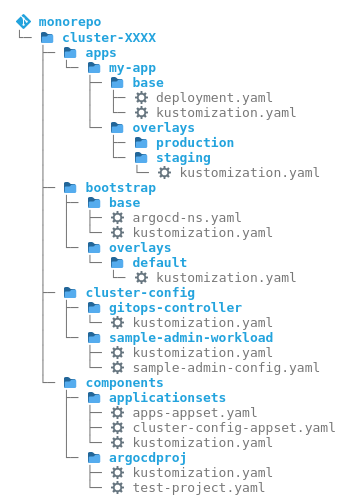

Figure 3: Repo structure of flux2-kustomize-helm-example

After these non-trivial examples in the context of Argo CD, our practical insight into the world of Flux begins with a positive surprise: No preliminary considerations or external tools are required for installation here. The Flux CLI brings a bootstrap command that performs bootstrapping of Flux in the cluster as well as the creation of repo structures. Additionally, Flux provides official examples that implement various patterns. We begin with the monorepo. Figure 3 shows the relationships. In the following, we describe them using the numbers in the figure:

1. The flux bootstrap command generates all the resources needed to install Flux into the flux-system folder, as well as a Git repository and a Kustomization. For bootstrapping, flux imperatively applies these once to the cluster. The Kustomization then references its own parent folder production. From here on, everything is managed via GitOps.

2. The flux-system Kustomization also deploys another Kustomization infrastructure pointing to the folder of the same name. This can be used to deploy cluster-wide resources such as ingress controllers and network policies.

3. In addition, flux-system deploys the Kustomization apps. This points to the subfolder of the respective environment under apps, for example apps/production.

4. In this folder there is a kustomization.yaml, which is used to include the folders of all applications.

5. In the folder of each application there is another kustomization.yaml, which collects the actual resources for each application in an environment: As a basis, typical for Kustomize, there is a subfolder of base (for example apps/base/app1). This contains config that is the same in all environments. In addition, there is config that is specific to each environment. This is overlaid on top of base using patches from the environment’s respective folder (for example, apps/production/app1).
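Items 4 and 5 can be sketched with two small kustomization.yaml files, shown here in one listing with their paths as comments. The application names app1 and app2 and the patch file name are hypothetical; the patch file is assumed to be a strategic merge patch that names the resource it modifies.

```yaml
# apps/production/kustomization.yaml (item 4):
# includes one folder per application in this environment
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - app1
  - app2
---
# apps/production/app1/kustomization.yaml (item 5):
# shared base plus environment-specific patch
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base/app1
patches:
  # Hypothetical file holding the production-specific settings
  - path: release-patch.yaml
```

Adding an application then means adding a folder under apps/base, one folder per environment, and one entry in each environment's kustomization.yaml.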

Analogously to the apps folder, there is also one folder per environment in the clusters folder. So here Flux implements an instance per cluster pattern: one Flux instance per environment.

The public example itself only shows the management of a single application, and it is not obvious how more are added. Our real-world experience is that one Flux instance usually manages multiple applications. Hence, Figure 3 shows an extended variant that supports multiple applications, based on insights gained from the discussion in this issue. This structure can also be viewed and tried out on GitHub at schnatterer/flux2-kustomize-helm-example.

This structure has the disadvantage that all applications under apps are deployed from a single Kustomization per environment. For example, when using the graphical interface of Weave GitOps, all resources contained therein are then displayed as one “app” on the interface (see Figure 4). This quickly becomes confusing. Analogous to the use of Applications with Argo CD (see previous examples), it is also conceivable to create one Kustomization per application, instead of a single Kustomization in the apps.yaml file. This requires more maintenance, but provides a clearer structure.

Figure 4: Multiple applications in one Kustomization (screenshot Weave GitOps)
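The alternative mentioned above, one Flux Kustomization per application, could look roughly as follows. This is a sketch under assumptions: the application names, the intervals and the dependency on the infrastructure Kustomization are illustrative and not taken from the official example.

```yaml
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: app1                 # hypothetical application name
  namespace: flux-system
spec:
  interval: 10m
  # Wait for cluster-wide resources before deploying the application
  dependsOn:
    - name: infrastructure
  sourceRef:
    kind: GitRepository
    name: flux-system
  path: ./apps/production/app1
  prune: true
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: app2                 # hypothetical application name
  namespace: flux-system
spec:
  interval: 10m
  dependsOn:
    - name: infrastructure
  sourceRef:
    kind: GitRepository
    name: flux-system
  path: ./apps/production/app2
  prune: true
```

Each application then shows up as its own entry with its own status, at the cost of one more resource to maintain per application and environment.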

The repo structure described here would also work for Argo CD with a few changes. Instead of the Kustomizations, Applications would have to be used for linking. The kustomization.yamls are understood by both tools.

Example 4: Flux repo per team

- Repo pattern: Repo per team

- Operator pattern: Instance per Cluster

- Operator: Flux (could be implemented similarly with Argo CD)

- Bootstrapping: flux CLI

- Linking: Flux Kustomization, Kustomize

- Features:

  - Same as example 3

  - Multi-tenancy: One operator per cluster environment manages multiple teams

- Source: fluxcd/flux2-multi-tenancy

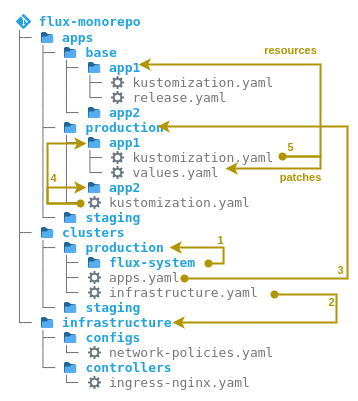

Figure 5: Relationship of the GitOps repos at flux2-multi-tenancy

If you prefer to use one repo per team for your organization, you will find an official example in the Flux project. In Flux, this is referred to as “multi-tenancy”. Instead of the more general term “tenant”, we use the term “team” here, which fits the pattern.

Some points are already known from the previous example. These include bootstrapping using the clusters folder and the cluster-wide resources in the infrastructure folder. Figure 5 shows the relationships. In the following, these are described using the numbers in the figure:

1. The Kustomization flux-system deploys a Kustomization tenants pointing to the folder tenants/production.

2. In this folder there is a kustomization.yaml which is used to include the folders of all teams in an environment, for example tenants/production/team1.

3. In the folder of each team there is another kustomization.yaml which collects resources for each team in an environment. As a basis, typical for Kustomize, there is a subfolder of base (for example tenants/base/team1). This contains config that is the same in all environments. In addition, there is config that is specific to each environment. This is overlaid on the base using patches from the environment’s respective folder (for example, tenants/production/team1).

4. Concretely, there can be several resources in the base folder, which are joined together via yet another kustomization.yaml.

5. The team repo is included via the sync.yaml file, which contains a GitRepository and yet another Kustomization. Specific for each environment is then only the path in the team repo, which is overlaid by means of a patch from the respective folder of the environment, for example tenants/production/team1/path.yaml.

The structure of the team repo then corresponds exactly to that of the apps folder from the previous example. This structure can also be viewed and tried out on GitHub at schnatterer/flux2-multi-tenancy. As in the previous example, the disadvantage is that all applications in the team repo are deployed from one Kustomization per environment, which quickly becomes confusing.
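The wiring described in item 5 can be sketched as follows, with the file paths given as comments. The team name, namespace, repo URL, branch and service account are hypothetical; the official example uses its own names.

```yaml
# tenants/base/team1/sync.yaml: links the team repo
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: team1
  namespace: team1
spec:
  interval: 1m
  # Hypothetical URL of the team's GitOps repo
  url: https://github.com/example-org/team1-gitops
  ref:
    branch: main
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: team1
  namespace: team1
spec:
  interval: 5m
  # Restricts what the team's manifests may do on the cluster
  serviceAccountName: team1
  sourceRef:
    kind: GitRepository
    name: team1
  prune: true
---
# tenants/production/team1/path.yaml: environment-specific patch
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: team1
  namespace: team1
spec:
  # Folder inside the team repo that holds the production config
  path: ./production
```

Only the last document differs between environments, so a staging cluster would reuse the same base and patch in a different path.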

Example 5: The Path to GitOps

- Repo pattern: Monorepo

- Operator pattern: Instance per Cluster

- Operator: Argo CD (or Flux)

- Bootstrapping: kubectl

- Linking: Application, ApplicationSet, Kustomize

- Features:

  - Solution for cluster-wide resources

  - Env per app pattern

  - Examples for Argo CD and Flux

- Source: christianh814/example-kubernetes-go-repo

Figure 6: The repo structure at example-kubernetes-go-repo

In his book “The Path to GitOps”, Christian Hernandez, who has been involved with GitOps for years at Akuity, Red Hat and Codefresh, devotes a chapter to the topic of repo and folder structures. Figure 6 shows his example of a monorepo. It can also be viewed on GitHub, for both Argo CD and Flux. Some of the folder names differ between the book and the repo on GitHub. This fits with a tip from the book: what matters is not the names of the repos, but the concepts they represent.

Much of what is known from the previous examples reappears in this example:

- There is a folder apps for applications. In the repo on GitHub, this is called tenants, so here it is related to teams as in example 4.

- For cluster-wide resources, there is a cluster-config folder, which is called core in the repo on GitHub.

- In the folder bootstrap, the bootstrapping of the operator is implemented. Here, similar to Autopilot (see example 1), Argo CD is installed directly from the public Argo CD repo over the Internet using Kustomize.

To avoid repetition, the relationships of the repo structure will not be described in detail here. However, the following points are interesting.

- This repo splits the config of the operator into two folders: the already known bootstrap folder and a components folder.

- In addition, the entire structure is in a folder cluster-XXXX, which suggests that the entire structure including Argo CD refers to one cluster. So the instance per cluster pattern is implemented here.

- For promotion, an “Env per app” pattern is implemented here via Kustomize, see the apps folder in Figure 6. This is described in the book, but is not implemented in the GitHub repo.

As mentioned, the same repo structure is also available for Flux. It is generally interesting that the same structure can be used with minor changes for both Argo CD and Flux. However, for Flux it is recommended to use the structure of the flux bootstrap command instead. Since this is implemented in Flux, it can be considered good practice for Flux. It makes it easier to understand and maintain, for example when updating Flux.

Example 6: Environment variants

- Operator: Argo CD (in principle also Flux)

- Linking: Kustomize

- Features:

- Different variants of the environments of an app

- Promotion by copying individual files

- Source: kostis-codefresh/gitops-environment-promotion

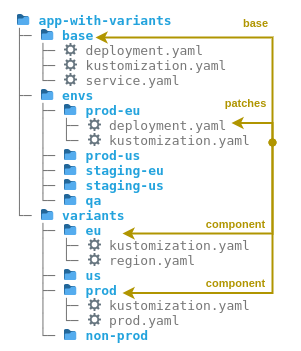

Figure 7: The folder structure for gitops-environment-promotion

This last example differs from the previous ones in that it does not describe the structure of an entire repo, but only that of a single application. It can therefore be combined with the other examples. This example focuses on the implementation of a large number of environments. It shows how different environments (integration, load, prod, qa and staging) can be rolled out in different regions (asia, eu, us) without creating much redundant config. In total, there are 11 environments. In addition, each environment is to be classified as either prod or non-prod. This shows that Kustomize is well suited to implementing such an extensive structure without redundancies. Although the example originates in the Argo CD ecosystem, it can also be used with Flux without changes, since the linking is accomplished exclusively by kustomization.yaml.

Figure 7 shows the structure simplified to five environments. The starting point is the subfolders of the envs folder, one per environment, for example envs/prod-eu. These subfolders would be included by an Argo CD Application or a Flux Kustomization. In each subfolder there is a kustomization.yaml, which builds on the base folder containing the config that is identical in all environments. The config of the variants is placed in subfolders of variants, for example eu and prod. In addition, the config specific to each environment is added by means of patches from the respective subfolder of envs, for example envs/prod-eu.
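A kustomization.yaml for one environment could combine these three layers roughly as shown below. This sketch assumes that the variant folders are written as Kustomize components (each containing a kustomization.yaml of kind Component), and the patch file names are hypothetical.

```yaml
# envs/prod-eu/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  # Config that is identical in all environments
  - ../../base
components:
  # Config shared by all environments of a variant
  - ../../variants/prod
  - ../../variants/eu
patches:
  # Config specific to this one environment (hypothetical file names)
  - path: version.yaml
  - path: replicas.yaml
```

Components are used here because several environments need to mix and match the same variants on top of a single base, which plain overlays cannot express without duplicating the base.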

In principle, this example could also be implemented by Helm, but this would be more complicated, and it would require the use of special CRDs, instead of universal kustomization.yaml.

This example also provides an idea for simplifying the promotion: in each folder there are many YAML files, one file per property. The advantage is that the promotion can then be done by simply copying one file. There is no need to cut and paste text, which simplifies the process and reduces the risk of errors. Also, the diffs are easier to read.
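A file that holds exactly one property might look like the following patch, which only sets the image version. The Deployment name, container name and image are hypothetical and serve purely as an illustration.

```yaml
# envs/staging-eu/version.yaml: contains nothing but the image version
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app               # hypothetical Deployment name
spec:
  template:
    spec:
      containers:
        - name: my-app       # must match the container name in base
          image: registry.example.com/my-app:1.2.3
```

Promoting this version to production would then consist of copying the file to envs/prod-eu/version.yaml, and the resulting diff shows a single changed line.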

Conclusion

Using recurring elements of existing GitOps tools, this series of articles describes GitOps patterns and classifies them into the four categories of operator deployment, repository, promotion and wiring. On the one hand, the patterns provide an overview of the options for design decisions for the GitOps process and for the structure of the associated repositories and what needs to be taken into account. On the other hand, they can help to find terms for the respective patterns, standardize them and facilitate communication.

This final part of the series describes practical examples of the patterns. They provide templates, ideas and tips for your own projects. Some recurring themes emerge, some of which are named differently: Structures for applications or teams, structures for cluster-wide resources and structures for bootstrapping.

The examples also show that in principle there are hardly any differences between Argo CD and Flux when it comes to structures. These are limited to bootstrapping and linking. Since Kustomize is understood by both Argo CD and Flux by means of kustomization.yaml, it turns out to be an operator-agnostic tool.

Much of what is described here can be easily tried out with the GitOps Playground shown in example 2.
